Better Data Modeling: 7 Differentiating Characteristics of Data Vault 2.0

Hard to believe that the 2nd Annual World Wide Data Vault Consortium (WWDVC15) is NEXT WEEK in beautiful Stowe, Vermont. It promises to be an excellent event. The speakers include myself, Claudia Imhoff, Dan Linstedt (the inventor of Data Vault), Scott Ambler, Roelant Vos, Dirk Lerner and many more. The focus will be DV 2.0, agile data warehousing, big data, NoSQL, virtualization and automation. Check out the agenda here: http://wwdvc.com/schedule/

So in preparation (and to encourage you to attend), I thought it might be good to review some of the important basics about Data Vault 2.0 and why it is an important evolution for the data warehousing community.

The approach started out under the official name Common Foundational Warehouse Modeling Architecture. It then became more commonly known as the “Data Vault”, a modelling method for data warehouses. It also came with a methodology and implementation guidelines, and it worked very well on relational platforms for many years (over 10, for those who did not know).

But technology evolved. NoSQL architectures came into the picture primarily as sources. The Apache Hadoop platform started offering a cheaper storage and processing MPP architecture.

Data Vault evolved into Data Vault 2.0 and already has many successful implementations. The original Data Vault is now referred to as Data Vault 1.0 (or DV 1.0) and it primarily has a modelling focus. DV 2.0 on the other hand changes some things, and adds a LOT.

Data Vault 2.0 has the following 7 differentiating characteristics:

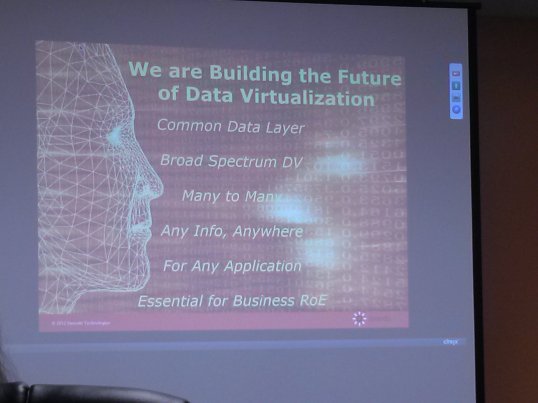

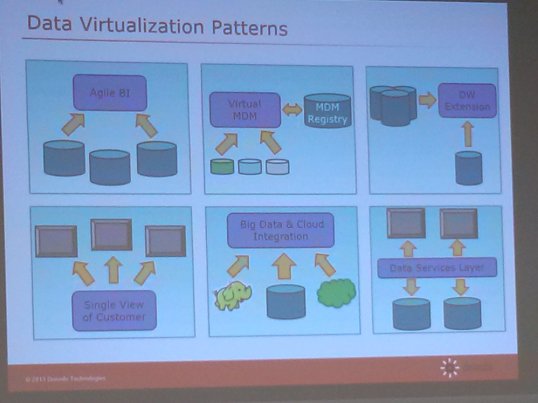

1. DV 2.0 is a complete system of Business Intelligence. It talks about everything from concept to delivery. While DV 1.0 had a major focus on modelling and many of the modelling concepts are similar, DV 2.0 goes a step further and talks about data from source to business user facing constructs with guidelines for implementation, agile, virtualization and more.

2. DV 2.0 can adapt to changes better than pretty much ANY other data warehouse architecture or framework. It can do it even better than DV 1.0 because of the change in design to adapt to NoSQL and MPP platforms, if needed. DV 2.0 has successfully been implemented on MPP RDBMS platforms like Teradata as well (ask Dan for details).

3. DV 2.0 is both “big data” and “NoSQL” ready. In fact, there are implementations where data is sourced in real-time from NoSQL databases with phenomenal success stories. One of these was presented at WWDVC 2014, where an organization saved lots of money by using this architecture.

A near real-time case study for absorbing data from MongoDB is being presented at WWDVC2015. It’s not to be missed.

4. DV 2.0 takes advantage of MPP style platforms and is designed with MPP in mind. While DV 1.0 also did this to an extent, DV 2.0 takes it to a completely different level with a zero-dependency type architecture. Of course, there are a few caveats you will need to learn.

5. DV 2.0 lets you easily tie structured and multi-structured data together (logically) where you can join data across environments easily. This particular aspect lets you build your Data Warehouse on multiple platforms while using the most appropriate storage platform to the particular data set. It lets you build a truly distributed Data Warehouse.

6. DV 2.0 has a greater focus on agility with principles of Disciplined Agile Delivery (DAD) embedded in the architecture and approach. Again, being agile was certainly possible with DV 1.0, but it wasn’t a part of the methodology. DV 2.0 is not just “agile ready”, it’s completely agile.

7. DV 2.0 has a very strong focus on both automation and virtualization, as much as possible. There are already a couple of automation tools in the market that have Dan’s approval (just ask). Some of them will be at WWDVC15.

It’s real-time ready, cloud ready, NoSQL ready and big data friendly. And practitioners have already had success in all these areas (on real projects, not just in the lab).
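To make the zero-dependency idea from point 4 a little more concrete, here is a minimal sketch in Python. It assumes the hash-key technique commonly used in DV 2.0, which this post does not spell out: the key is computed directly from the business key instead of being pulled from a database sequence. The function name, the `||` delimiter, the trim-and-uppercase normalization and the choice of MD5 are all illustrative assumptions on my part, not an official standard.

```python
import hashlib


def hash_key(*business_key_parts: str) -> str:
    """Derive a deterministic key from one or more business key parts.

    Hypothetical helper: the normalization rules and the delimiter are
    assumptions chosen for illustration only.
    """
    # Normalize each part so that " cust-1001 " and "CUST-1001" match.
    normalized = "||".join(part.strip().upper() for part in business_key_parts)
    # MD5 is used here as a deterministic fingerprint, not for security.
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()


# Each key depends only on the incoming business keys, so none of these
# calculations needs a lookup against a table that was loaded earlier.
customer_hk = hash_key("cust-1001")                 # hub key
order_hk = hash_key("ord-77")                       # hub key
customer_order_hk = hash_key("cust-1001", "ord-77")  # link key
```

Because every key can be calculated from the source row alone, the load processes for different tables do not have to wait on each other, which is roughly what lets the loads spread out across an MPP platform. Treat this as a sketch under the assumptions above rather than a reference implementation.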

And, as you’ll notice on the agenda, the focus at WWDVC15 will be Data Vault 2.0 with examples of sourcing it from MongoDB, with examples of virtualization (from me!), with examples of design mods (also one from me), with examples of Hadoop implementations and more. It’s not something you want to miss, and there’s hardly any time or seats left.

If you are coming, I look forward to seeing you and chatting about the world of DW/BI and agile. If you want to attend, grab one of the last seats over at http://wwdvc.com/#tile_registration (if there are still seats left by the time you get this message).

See you soon!

Kent

The Data Warrior

P.S. After the conference, the next place you’ll hear about DV 2.0 is Berlin, Germany, where a boot camp and certification starts on 16th June. The details are here: http://www.doerffler.com/en/data-vault-training/data-vault-2-0-boot-camp-and-certification-berlin/
