ODTUG KScope: Day 5 – Happy Trails

Well, the final day of KScope12 finally arrived, and it was another hot one, between the final sessions and the Texas heat. Another bright red sunrise greeted us, as it has all week.

Today I managed to get a picture of the group that showed up for Chi Gung every day at 7 AM. We even had some new people today (officially the last day). They all enjoyed the sessions and learned (hopefully) enough to practice a bit once they return home.

I am grateful to all the participants for showing up early each morning with enthusiasm and a willingness to try something new. It made my job of leading them much easier. (There will be a YouTube video sometime next week for people to review, so stay tuned.)

The first order of business for the day (after Chi Gung) was the official KScope closing session. Even though there were still two sessions to go afterward, we had the closing at 9:45 AM. We were entertained, yet again, with some photo and video footage taken throughout the week, including one interview with me! We also learned who got the presenter awards for each track and for the entire event.

Then we all got beads to remind us to go to KScope13 in New Orleans.

Next was my final session for the event: Reverse Engineering (and Re-Engineering) an Existing Database with Oracle SQL Developer Data Modeler.

I had a surprising number of people for the last day after the closing session. I think there were about 70 people wanting to learn more about SDDM. Apparently most people are unaware of the features of the tool (which I have written about in several posts).

So, that was nice.

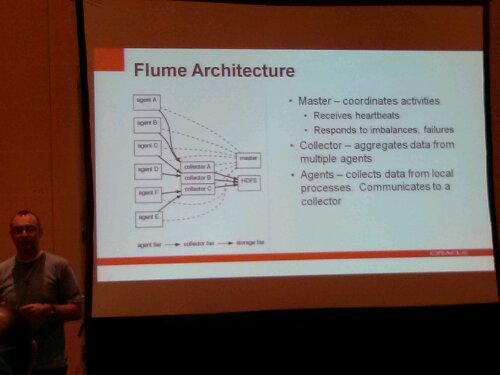

Finally, I went to JP Dijcks's talk about Big Data and Predicting the Future.

His basic premise is that we should now never throw away any data as it all can be used to extend the depth of analytics. We can react to events in real time and proactively change outcomes of those events.

The diagram above shows the basics of one way that data moves through the world and into the Hadoop file systems. I am oversimplifying but it is a cool diagram.

Part of the challenge is uncovering un-modeled data. I guess that is where the recent Oracle acquisition, Endeca, comes in with their Data Discovery tool (again, oversimplifying).

And that was pretty much it for the show. It was a great week with lots of learning and networking (and tweeting). We all had a good time and learned enough to make our heads explode.

I look forward to meeting folks again next year at KScope13 in New Orleans.

Kent
