Happiness is not the absence of conflict

Happiness is not the absence of conflict but the ability to cope with it

–Anon

The ability to cope with conflict is part of having a warrior spirit!

Have a great day!

Kent

The Oracle Data Warrior

For those that missed this year’s event, here is another little recap complete with links to all the relevant details.

Thanks to the folks at Oracle for giving me permission to re-blog this post! (I apologize in advance for some of the funky formatting.)

ORACLE OPENWORLD 2013 HIGHLIGHTS

WE HOPE TO SEE YOU AT OOW 2014!!

Words to live by – warrior or not:

‘Do nothing which is of no use.’ ~Miyamoto Musashi

— Leo Babauta (@zen_habits) November 9, 2013

This week I did a little travel and went to Durham, North Carolina to present at the 2013 East Coast Oracle Users Conference (aka ECO). While I have been aware of this event for over 20 years, it is the first time I have attended.

It was worth the trip. (Thanks to Jeff Smith at Oracle for alerting me to the event and encouraging me to submit). He actually sent me, Danny and Sarah (The EPM Queen). It was great to have members of the ODTUG clan together.

Overall, it was a well-run event held at the Sheraton Imperial Hotel and Conference Center. It drew over 300 attendees, and a long list of Oracle ACEs and ACE Directors were there to present to a crowd very eager to learn and network.

Our opening keynote from Steven Feuerstein (inventor of the PL/SQL Challenge) was a fun take on different types of therapy and how they might be applied to software developers.

He discussed the use of:

It was a fun, light way to start the conference with some very valuable advice.

Oracle ACE Director, author, and trainer Craig Shallahamer did two deep dive tuning sessions that I attended. In the first one, Introduction to Time-based Performance Analysis: Stop the Guessing, Craig gave us his four point framework for Holistic Performance Analysis. The points were:

With that he got into all sorts of v$ view stuff that went mostly over my head. Needless to say I will have to download the slides from his site (orapub.com) and give them to someone more attuned to this kind of tuning than I!

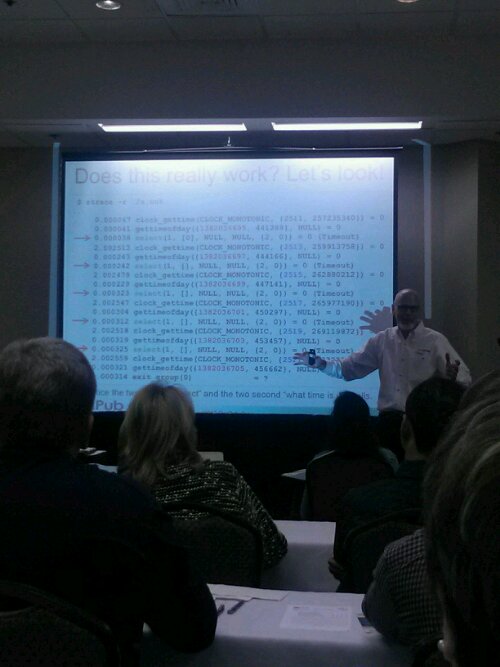

Oracle ACE Director, Craig Shallahamer discusses low level details for understanding Oracle CPU consumption

The second presentation Craig gave was called Understanding Oracle CPU Consumption: The Missing Link. Again lots of views and some Linux OS utilities (e.g., perf) and lots of numbers were displayed and discussed to try to ferret out how to determine what Oracle functions were actually taking up CPU time.

Even though I don’t really understand a lot of this (hey, I am a data modeler, not a dba right?) I like to go to sessions like this as I enjoy listening to smart people talk passionately about the things they do, and I figure I might retain just enough to point someone else in the right direction in the future, even if it is only to give them a copy of these slides!

ECO had one of the nicest little lunch buffets I have eaten in a while. Very simple southern food that included cole slaw, potato salad, baked chicken, fried chicken, pulled pork (with N. Carolina bbq sauce), hush puppies and apple cobbler. (I did not say it was a light lunch, right?)

I love all kinds of BBQ and the pulled pork did not disappoint. I do not usually like fried chicken but figured I should try it and was pleasantly surprised. Crisp and moist. Very nice.

I had the best turnout ever for this topic, with over 40 people in the session, most of whom were game to try my gamification of data model review sessions.

One of the tasks was to translate relationship sentences and model descriptions into Haiku (or another form). There were prizes as an incentive to play along.

The winner by general acclamation was Edie Waite from Raleigh, NC with this little limerick:

The data model we used had the entities: Country, Region, Employee, Locations, and a few others.

Another Haiku from Sarah Zumbrum (a noted non-data modeler) went like this:

Keynote today was about eBusiness suite stuff. I sat there after breakfast mostly not listening as I started to put this blog post together.

Then I did my 2nd talk.

I had a somewhat disappointing turnout (only 5 people, sigh) but it was a great exchange with those 5 people. We had a very good discussion about applying agile techniques to building a data warehouse and I was able to introduce them to some of the details of Data Vault Data Modeling. None of them knew much about data vault, but some had heard the term.

One attendee did tell me he was skeptical about the approach when he came in as he was a traditional Kimball dimensional data warehouse guy. But after the session he was willing to concede there was some merit and ideas he had not seen before and he was going to take those into consideration as he embarked on a new phase of his project where there were some complex problems to solve. He could see that data vault might just help.

Really can’t ask for more than that!

So my last session for the event was Craig Warman’s talk on embedded analytics. It was a good discussion about how BI and analytics have evolved. Craig presented a simple maturity model as part of the talk:

Level 0: BI reporting and analytic applications are completely separate from other applications.

Level 1: Gateway Analytics – Operational applications have a report tab or menu item to launch the BI reporting tool interface. Maybe there is a login pass through.

Level 2: Inline Analytics – At this level, the analytics and BI tool has been incorporated into the operational application interface to the point it has the same look and feel and you can’t tell it is a separate product or tool. This is where many organizations are today.

Level 3: Infused Analytics – This is the goal. At this level the analytics are truly part of the application and provide core functionality. Examples of this are the recommendations you get on Amazon as you check out or the movie suggestions you get on Netflix based on your prior movie choices. If the analytic pieces were removed, the application would not function correctly.

Well, that’s it for this conference.

Put ECO on your radar for 2014.

See you around.

Kent

P.S. Next conference on my agenda is RMOUG TD 2014. Let me know if you will be there.

One of the ongoing complaints about many data warehouse projects is that they take too long to deliver. This is one of the main reasons that many of us have tried to adopt methods and techniques (like SCRUM) from the agile software world to improve our ability to deliver data warehouse components more quickly.

So, what activity takes the bulk of development time in a data warehouse project?

Writing (and testing) the ETL code to move and transform the data can take up to 80% of the project resources and time.

So if we can eliminate, or at least curtail, some of the ETL work, we can deliver useful data to the end user faster.

One way to do that would be to virtualize the data marts.

For several years Dan Linstedt and I have discussed the idea of building virtual data marts on top of a Data Vault modeled EDW.

In the last few years I have floated the idea among the Oracle community. Fellow Oracle ACE Stewart Bryson and I even created a presentation this year (for #RMOUG and #KScope13) on how to do this using the Business Model (meta-layer) in OBIEE (It worked great!).

While doing this with a BI tool is one approach, I like to be able to prototype the solution first using Oracle views (that I build in SQL Developer Data Modeler of course).

The approach to modeling a Type 1 SCD this way is very straightforward.

How to do this easily for a Type 2 SCD has evaded me for years, until now.

So how do you create a virtual Type 2 dimension (that is “Kimball compliant”) on a Data Vault when you have multiple Satellites on one Hub?

(NOTE: the next part assumes you understand Data Vault Data Modeling. If you don’t, start by reading my free white paper, but better still go buy the Data Vault book on LearnDataVault.com)

Build an insert-only PIT (Point-in-Time) table that keeps history. This is sometimes referred to as a historicized PIT table. (See the Super Charge book for an explanation of the types of PIT tables.)

Add a surrogate Primary Key (PK) to the table. The PK of the PIT table will then serve as the PK for the virtual dimension. This meets the standard for classical star schema design to have a surrogate key on Type 2 SCDs.
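Here is a minimal sketch of one way such an insert-only PIT table could be loaded. It is not from the Data Vault book: it uses Python's built-in sqlite3 module as a stand-in for Oracle so it can be run anywhere, and the sample rows, the `load_pit` function, and the loading rule itself (one PIT row per Hub key for every date on which any Satellite changed) are my assumptions for illustration. The table and column names follow the view example in this post.

```python
import sqlite3

SCHEMA = """
CREATE TABLE hub_cust      (csid INTEGER PRIMARY KEY, customer_num TEXT);
CREATE TABLE sat_cust_name (csid INTEGER, load_dts TEXT, name TEXT);
CREATE TABLE sat_cust_addr (csid INTEGER, load_dts TEXT, address TEXT);
-- Historicized PIT table: pit_seq is the surrogate PK; rows are only inserted.
CREATE TABLE sat_cust_pit (
    pit_seq          INTEGER PRIMARY KEY AUTOINCREMENT,
    csid             INTEGER NOT NULL,
    load_dts         TEXT    NOT NULL,
    name_load_dts    TEXT,
    address_load_dts TEXT
);
"""

# Assumed loading rule: for each Hub key and each date on which any Satellite
# changed, record which Satellite rows were current on that date. Rows already
# in the PIT table are skipped, so the load never updates or deletes anything.
LOAD_PIT = """
INSERT INTO sat_cust_pit (csid, load_dts, name_load_dts, address_load_dts)
SELECT t.csid, t.load_dts,
       (SELECT MAX(n.load_dts) FROM sat_cust_name n
         WHERE n.csid = t.csid AND n.load_dts <= t.load_dts),
       (SELECT MAX(a.load_dts) FROM sat_cust_addr a
         WHERE a.csid = t.csid AND a.load_dts <= t.load_dts)
  FROM (SELECT csid, load_dts FROM sat_cust_name
        UNION
        SELECT csid, load_dts FROM sat_cust_addr) t
 WHERE NOT EXISTS (SELECT 1 FROM sat_cust_pit p
                    WHERE p.csid = t.csid AND p.load_dts = t.load_dts)
 ORDER BY t.csid, t.load_dts
"""


def pit_count(conn):
    return conn.execute("SELECT COUNT(*) FROM sat_cust_pit").fetchall()[0][0]


def load_pit(conn):
    """Append any missing PIT rows and return how many were added."""
    before = pit_count(conn)
    conn.execute(LOAD_PIT)
    conn.commit()
    return pit_count(conn) - before


def demo_db():
    """Build an in-memory database with made-up sample data."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.execute("INSERT INTO hub_cust VALUES (1, 'C-100')")
    conn.executemany("INSERT INTO sat_cust_name VALUES (?, ?, ?)",
                     [(1, "2013-01-01", "Acme"), (1, "2013-03-01", "Acme Corp")])
    conn.executemany("INSERT INTO sat_cust_addr VALUES (?, ?, ?)",
                     [(1, "2013-01-01", "1 Main St"), (1, "2013-02-01", "2 Oak Ave")])
    conn.commit()
    return conn


conn = demo_db()
print("rows added on first load:", load_pit(conn))
print("rows added on rerun:", load_pit(conn))
for row in conn.execute("SELECT * FROM sat_cust_pit ORDER BY pit_seq"):
    print(row)
```

Note that a Satellite pointer stays NULL if that Satellite has no row yet on a given date. A view that inner-joins on the pointers would drop such a row, so a real load would need a rule for that case (ghost records are one common choice).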

To build the VSCD2 you now simply create a view that uses the PIT table to join the Hub and all the Satellites together. Here is an example:

    Create view Dim2_Customer
      (Customer_key, Customer_Number, Customer_Name, Customer_Address, Load_DTS)
    as
    Select sat_pit.pit_seq, hub.customer_num, sat_1.name, sat_2.address, sat_pit.load_dts
    from HUB_CUST hub, SAT_CUST_PIT sat_pit, SAT_CUST_NAME sat_1, SAT_CUST_ADDR sat_2
    where hub.CSID = sat_pit.CSID
      and hub.CSID = sat_1.CSID
      and hub.CSID = sat_2.CSID
      and sat_pit.NAME_LOAD_DTS = sat_1.LOAD_DTS
      and sat_pit.ADDRESS_LOAD_DTS = sat_2.LOAD_DTS

The main objection to this approach is that the virtual dimension will perform very poorly. While this may be true for very high volumes, or on poorly tuned or resourced databases, I maintain that with today’s evolving hardware appliances (e.g., Exadata, Exalogic) and the advent of in memory databases, these concerns will soon be a thing of the past.
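If you want to try the view without an Oracle instance handy, here is a small sketch that runs the same join in SQLite through Python's built-in sqlite3 module. The sample customer and the hand-loaded PIT rows are made up for illustration, and SQLite is only a stand-in: it says nothing about how the view performs on Oracle.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE hub_cust      (csid INTEGER PRIMARY KEY, customer_num TEXT);
CREATE TABLE sat_cust_name (csid INTEGER, load_dts TEXT, name TEXT);
CREATE TABLE sat_cust_addr (csid INTEGER, load_dts TEXT, address TEXT);
CREATE TABLE sat_cust_pit  (pit_seq INTEGER PRIMARY KEY, csid INTEGER,
                            load_dts TEXT, name_load_dts TEXT,
                            address_load_dts TEXT);

-- Made-up sample data: one customer, two names, two addresses.
INSERT INTO hub_cust VALUES (1, 'C-100');
INSERT INTO sat_cust_name VALUES
    (1, '2013-01-01', 'Acme'), (1, '2013-03-01', 'Acme Corp');
INSERT INTO sat_cust_addr VALUES
    (1, '2013-01-01', '1 Main St'), (1, '2013-02-01', '2 Oak Ave');

-- Hand-loaded PIT rows: one per change date, pointing at the current rows.
INSERT INTO sat_cust_pit VALUES
    (1, 1, '2013-01-01', '2013-01-01', '2013-01-01'),
    (2, 1, '2013-02-01', '2013-01-01', '2013-02-01'),
    (3, 1, '2013-03-01', '2013-03-01', '2013-02-01');

-- Same join logic as the Oracle view in the post.
CREATE VIEW dim2_customer
    (customer_key, customer_number, customer_name, customer_address, load_dts) AS
SELECT sat_pit.pit_seq, hub.customer_num, sat_1.name, sat_2.address, sat_pit.load_dts
  FROM hub_cust hub, sat_cust_pit sat_pit, sat_cust_name sat_1, sat_cust_addr sat_2
 WHERE hub.csid = sat_pit.csid
   AND hub.csid = sat_1.csid
   AND hub.csid = sat_2.csid
   AND sat_pit.name_load_dts = sat_1.load_dts
   AND sat_pit.address_load_dts = sat_2.load_dts;
""")


def dimension_rows(conn):
    """Return every version of every customer, ordered by surrogate key."""
    return conn.execute(
        "SELECT * FROM dim2_customer ORDER BY customer_key"
    ).fetchall()


for row in dimension_rows(conn):
    print(row)
```

Each PIT row becomes one dimension row, so the customer shows up once per version, and the PIT sequence number serves as the surrogate key a fact table would reference.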

UPDATE 26-May-2018 – Now 5 years later I have successfully done the above on Oracle. But now we also have the Snowflake elastic cloud data warehouse, where all the prior constraints are indeed eliminated. With Snowflake you can now easily choose to instantly add compute power if the view is too slow, or do the work and processing to materialize the view. (end update)

Worst case, after you have validated the data with your users, you can always turn it into a materialized view or a physical table if you must.
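To make the trade-off concrete, here is a rough sketch of the "physical table" option, again using Python's sqlite3 as a stand-in. SQLite has no materialized views, so the hypothetical `materialize` helper below simply copies the view's current result into a table; on Oracle you would more likely reach for CREATE MATERIALIZED VIEW and its refresh options. The tiny table and view are made up for the demo.

```python
import sqlite3


def materialize(conn, view_name, table_name):
    """Copy the current result of a view into a physical table (full refresh)."""
    # Names are interpolated directly, so they must come from trusted code.
    conn.execute(f'DROP TABLE IF EXISTS "{table_name}"')
    conn.execute(f'CREATE TABLE "{table_name}" AS SELECT * FROM "{view_name}"')
    conn.commit()


def row_count(conn, name):
    return conn.execute(f'SELECT COUNT(*) FROM "{name}"').fetchall()[0][0]


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sat_cust_name (csid INTEGER, load_dts TEXT, name TEXT);
INSERT INTO sat_cust_name VALUES (1, '2013-01-01', 'Acme');
CREATE VIEW v_cust_name AS SELECT csid, load_dts, name FROM sat_cust_name;
""")

materialize(conn, "v_cust_name", "t_cust_name")

# New data arrives after the copy was taken.
conn.execute("INSERT INTO sat_cust_name VALUES (1, '2013-03-01', 'Acme Corp')")
conn.commit()

view_rows = row_count(conn, "v_cust_name")    # the view sees the new row
table_rows = row_count(conn, "t_cust_name")   # the copy does not, until refreshed
print("view:", view_rows, "table:", table_rows)
```

The view is always current but pays the join cost on every query, while the table is fast to read but stale until you refresh it. That refresh step is exactly the ETL-like work the virtual approach was trying to avoid, which is why I treat it as the worst case.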

So what do you think? Have you ever tried something like this? Let me know in the comments.

Get virtual, get agile!

Kent

The Data Warrior

P.S. I am giving a talk on Agile Data Warehouse Modeling at the East Coast Oracle Conference this week. If you are there, look me up and we can discuss this post in person!
