Last week I had the pleasure of spending a few days in lovely Stowe, Vermont at the Stoweflake Mountain Resort and Spa attending the 3rd Annual World Wide Data Vault Consortium (#WWDVC). Not only was the location picturesque, the weather was near perfect, the beer was tasty, and the learning and networking were outstanding.

We had 75 attendees coming from all over the world – Germany, Switzerland, Canada, England, Australia, New Zealand, The Netherlands, USA, Finland, and India. Quite a turnout!

Day 1 – Data Vault Brainstorming

This year I arrived early enough to participate in what is arguably the best part of the event – a full-day, open forum discussion with certified Data Vault modelers and practitioners, led by the inventor of Data Vault, Dan Linstedt.

The brain power in the room was stunning. There were about 30 people in all and we all got to introduce ourselves and talk a bit about what we had been doing with Data Vault. It was great to hear the many and varied ways in which Data Vault is being used across multiple industries (including a US intelligence agency – but that is a secret). The uses ranged from traditional data warehousing and BI, to real-time streaming IoT data, to virtual Data Vaults and virtualized information marts, to using Data Vault to help with Master Data Management (MDM). It was eye-opening and exciting to hear all these applications and opportunities.

If you are not yet certified, get certified! Then you can attend this session at WWDVC 2017 (spoiler – at Stoweflake again!). And you are in luck as Dan just announced three classes later this year in St Albans, Vermont. Plus there are multiple classes coming up in Europe as well.

Day 2 – Hands-on Workshops

Another unprecedented day at WWDVC. The three platinum sponsors, AnalytixDS, Talend, and Varigence, all ran 3-hour hands-on workshops. These were a fantastic opportunity to see how these vendors have really stepped up to the plate to support quickly building Data Vault solutions with their tools.

These were great sessions, led by highly qualified folks. They showcased some great solutions and answered a lot of questions.

All three sessions were standing room only – with over 35 attendees. (We had to drag in chairs from other rooms!)

Be sure to make time to attend these next year as I am sure they will be on the agenda again.

Day 3 – The Main Event Begins

Yes, all the way to Day 3 before the official kickoff with keynote and speakers.

Dan of course got us started with a welcome, thanks to all the sponsors, and housekeeping. Nicely, this event has only one room and one track, so no one has to pick between sessions!

Keynote

The keynote this year was Swimming in the Data Lake by none other than the Father of Data Warehousing, Bill Inmon. I greatly enjoyed his somewhat irreverent look at our industry and his discussion on Big Data and the Data Lake concepts. It was quite a humorous talk (“I don’t mean to offend anybody, but….”). I would say it is one of the best talks I have ever heard Bill give over my 20+ years of knowing him (so I have heard a few).

And being a prolific author, Bill of course has a new book out on Data Lakes (available now on Amazon).

Being good geeks, several of us did manage to get our picture taken with Mr. Inmon as “social evidence” that we know him (well, I actually did co-author a book with him back in the day).

Lots of Talks

Yes, it was a full day with tons of stuff to fill our heads with ideas: new, useful, and occasionally controversial. (Stay tuned for videos on all these!)

Dan’s business partner, Sanjay Pande, came all the way from India to talk about Data Vault 2.0 on Hadoop. Roelant Vos came again from Australia to give us a business-based view of a Data Vault project on Customer Centric Analytics at his company (Allianz). Mary Mink and Sam Bendayan of Ultimate Software came for the 2nd year to talk about how their SaaS company is using Data Vault to provide customer value. This time they talked about their efforts to move to virtual information marts (very cool).

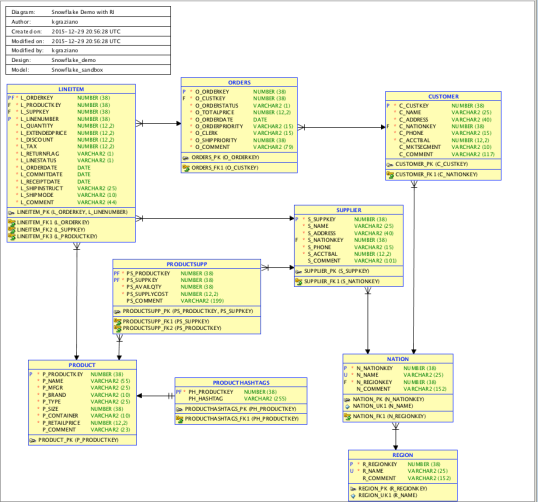

I did my presentation on Building a Virtualized ODS. This was a real-life example from my consulting work last year: an agile data warehouse project based on Data Vault architectural principles. It was a fun talk with lots of interaction. I love challenging the norm, then proving it works!

Of course I did have to do a little intro promo about my employer, Snowflake Computing. I am happy to say there was quite a bit of interest in our cloud-native, elastic data warehouse offering.

After my talk I did a drawing for a GoPro camera (courtesy of Snowflake). I am happy to say it went to Russell Searle from Australia! This man loves Data Vault so much he has paid his own way to Vermont twice now to attend WWDVC. Now that is dedication!

Days 4 & 5

Sadly I had other commitments back in Texas and could not stay for these days (but did follow along a bit on Twitter). If you want to see everything that happened, search Twitter for #WWDVC.

One fun thing on Day 4 was that a few people got to go up in a tethered hot air balloon. Hopefully I can try that next year.

Other Fun Stuff

Of course not everything happens in the sessions. Lots of good networking and information exchange happens informally at these events. I did several impromptu demonstrations of Snowflake. The German and Australian contingents were quite interested and can’t wait until Snowflake is available in their regions.

Thanks to my friends Paul and Raphael at WhereScape for loaning me their big monitor!

I introduced a very international crowd to the best northern-style, southern BBQ at the Sunset Grille. We had good Data Vault, and non-DV, conversations along with finger-licking ribs, brisket, and pulled pork (and beer of course).

Take Aways

Every year, as he closes out the event, Dan tries to summarize key learnings for everyone to take home. Here they are for WWDVC 2016:

Well that is it for this time around. With such a great event it is impossible to adequately cover everything but I hope this is enough to get you to put WWDVC 2017 on your event calendar. Ask for the time off now!

Safe travels to all the attendees. See you again soon.

Kent

The Data Warrior
